During my “What’s New in ADO.NET 2.0” talk at Code Camp, the attendees applauded the SqlDependency class.

"Great job" to Leonid Tsybert and everyone on the ADO.NET team!

http://www.AcehAid.org

Holy cannoli.

Remember when I used bulk copy to insert 104,000 records into another database? I compared it to a DataAdapter update using batch updating, with the batch size set to 100.

With the November CTP (VS2005 and the full version of SQL Server 2005), the bulk copy took 21 seconds and the update took 41 minutes.

Out of curiosity, I just ran this again with the Feb CTP versions of VS2005 and SQL Server 2005.

The bulk copy was about the same. But the update using the same table and the same settings took only 8 minutes!

Wowee kazowee!

Update: Pablo Castro recommends: “For maximum perf in batching, make sure you wrap the batched update around a transaction.”
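Here is a minimal sketch of what Pablo describes: an adapter with UpdateBatchSize set (100, as in my test) whose Update call runs inside a single SqlTransaction. It assumes a hypothetical destination table dbo.MyTable with an int Id and an nvarchar(50) Name, so swap in your own table and parameters. The UpdatedRowSource line matters, because ADO.NET 2.0 won't batch a command that asks to read rows back from the server.

```vbnet
Imports System.Data
Imports System.Data.SqlClient

' Sketch only: dbo.MyTable (Id int, Name nvarchar(50)) is a hypothetical destination table.
Public Module BatchUpdateSketch

    ' Builds an adapter whose InsertCommand can be sent in batches.
    Public Function CreateBatchAdapter(ByVal conn As SqlConnection, ByVal batchSize As Integer) As SqlDataAdapter
        Dim insertCmd As New SqlCommand("INSERT INTO dbo.MyTable (Id, Name) VALUES (@Id, @Name)", conn)
        insertCmd.Parameters.Add("@Id", SqlDbType.Int, 0, "Id")
        insertCmd.Parameters.Add("@Name", SqlDbType.NVarChar, 50, "Name")
        ' Batching is only allowed when the command doesn't read rows back from the server.
        insertCmd.UpdatedRowSource = UpdateRowSource.None

        Dim da As New SqlDataAdapter()
        da.InsertCommand = insertCmd
        ' 1 turns batching off, 0 removes the limit, n sends n rows per round trip.
        da.UpdateBatchSize = batchSize
        Return da
    End Function

    ' Sends all pending inserts in batches inside a single local transaction.
    Public Function UpdateInTransaction(ByVal conn As SqlConnection, ByVal da As SqlDataAdapter, ByVal table As DataTable) As Integer
        conn.Open()
        Try
            Using tran As SqlTransaction = conn.BeginTransaction()
                ' Each command the adapter runs must be enlisted in the transaction.
                da.InsertCommand.Transaction = tran
                Dim affected As Integer = da.Update(table)
                tran.Commit()
                Return affected
            End Using ' disposing an uncommitted transaction rolls it back
        Finally
            conn.Close()
        End Try
    End Function
End Module
```

The rows in the DataTable have to be in the Added state for InsertCommand to fire; one way to get that is to set AcceptChangesDuringFill = False on the adapter that reads the source table.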

When I uninstalled the November CTP of SQL Server 2005, I inadvertently deleted my databases as well. When I installed the Feb bits and looked at the instructions for getting AdventureWorks back in there, I didn’t have the right files. So I trolled around the install disk, found the CAB file containing the mdf and ldf, extracted them, trimmed the extra glob off the end of the file names, and then attached them. No problem. They are in [DISK]\Setup\Samples.cab.

The files are named AdventureWorks_data.mdf.EE9FA……… and AdventureWorks__Log.ldf.EE9FA…..
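If you’d rather script the attach step, here is a sketch that runs CREATE DATABASE ... FOR ATTACH against master. The module and function names are mine, and any paths you pass in are whatever you used when you extracted and renamed the two files.

```vbnet
Imports System.Data.SqlClient

' Sketch only: attaches an existing .mdf/.ldf pair as a database.
Public Module AttachSketch

    ' Builds the T-SQL; single quotes in paths are doubled so the literals stay valid.
    Public Function BuildAttachSql(ByVal dbName As String, ByVal mdfPath As String, ByVal ldfPath As String) As String
        Return String.Format( _
            "CREATE DATABASE [{0}] ON (FILENAME = N'{1}'), (FILENAME = N'{2}') FOR ATTACH", _
            dbName, mdfPath.Replace("'", "''"), ldfPath.Replace("'", "''"))
    End Function

    ' Runs the attach statement; the connection string should point at master.
    Public Sub AttachDatabase(ByVal masterConnString As String, ByVal sql As String)
        Using conn As New SqlConnection(masterConnString)
            Using cmd As New SqlCommand(sql, conn)
                conn.Open()
                cmd.ExecuteNonQuery()
            End Using
        End Using
    End Sub
End Module
```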

1) SqlDependency is throwing a weird error just after the notification comes back from SQL Server to the client: an ObjectDisposedException with no stack trace, and not happening within a method. This also affects System.Web.Caching.SqlCacheDependency, which uses the ADO.NET class (someone at MS is helping me with this one).

2) The IXmlSerializable features of DataTable appear to be broken. I only tried two: passing a DataTable from a web service (the WebMethod that returns a DataTable is getting serialized improperly) and DataTable.Merge (it just adds rows; it does not merge).

3) Bulk copy is throwing a bizarre error that is nowhere to be found in Google. When I run the WriteToServer method, it quickly throws an exception that says “Cannot access destination table ‘mytablename’”. I can’t see what’s going on in Profiler. Duh! One second after I posted this, I remembered. It’s an INSERT! Previously I was deleting all rows from the destination table and then doing the bulk copy. This time I’m using a different table from AdventureWorks, so I have to get the empty table in there first.
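For the record, a sketch of the fix: create the empty destination table first, then bulk copy into it. It assumes both databases sit on the same server, so that a SELECT ... INTO ... WHERE 1 = 0 can clone the column structure without copying rows; table names are hypothetical. KeepIdentity is there because SELECT INTO typically carries identity columns over, and without it the destination would generate new identity values.

```vbnet
Imports System.Data
Imports System.Data.SqlClient

' Sketch only: table names passed in are hypothetical.
Public Module BulkCopySketch

    ' T-SQL that creates destTable with sourceTable's columns but no rows.
    Public Function BuildEmptyCloneSql(ByVal sourceTable As String, ByVal destTable As String) As String
        Return String.Format("SELECT * INTO {1} FROM {0} WHERE 1 = 0", sourceTable, destTable)
    End Function

    ' Streams rows from any IDataReader into a destination table that must already exist.
    Public Sub CopyRows(ByVal destConnString As String, ByVal destTable As String, ByVal reader As IDataReader)
        Using bulk As New SqlBulkCopy(destConnString, SqlBulkCopyOptions.KeepIdentity)
            bulk.DestinationTableName = destTable
            bulk.BatchSize = 1000
            bulk.WriteToServer(reader)
        End Using
    End Sub
End Module
```

Run the BuildEmptyCloneSql statement with ExecuteNonQuery first; only then will WriteToServer find a table to INSERT into.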

This is a bummer: 3 (make that 2) of my demos for my ADO.NET 2.0 talk are dead for now. But I knew what I was in for…

From Andrew Conrad’s post about DataSets and Null values:

[because the features are currently broken], the DataSet behavior WRT to nullable types will either be changed or not supported for RTM of VS 2005. However, it is very probable that it will be supported some time in the future.

See my previous post about Nullable Types and ADO.NET 2.0 for why this interests me.

Perhaps, it’s true 🙂 (and hopefully just momentary…)

I have LOST MY MIND!

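This works just fine:

```vbnet
Imports System.Data
Imports System.Data.SqlClient

' Fill a DataSet from the pubs sample database
Dim conn As SqlClient.SqlConnection = New SqlClient.SqlConnection("server=myserver;Trusted_Connection=True;Database=pubs")
Dim cmd As SqlClient.SqlCommand = New SqlClient.SqlCommand("select * from authors", conn)
Dim da As New SqlDataAdapter(cmd)
Dim ds As New DataSet
da.Fill(ds)

' Copy returns a new DataTable that doesn't belong to ds, so ds2 accepts it
Dim ds2 As New DataSet
Dim t As DataTable = ds.Tables(0).Copy
ds2.Tables.Add(t)
```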

What happened to the “DataTable belongs to another dataset” exception? Was it only in 1.0, and I just never tried it again with 1.1? I know I struggled with this forever a long time ago.

Oh, do I need a vacation.

Rather than just searching for workarounds or whining about this problem in my blog, I have submitted a suggestion in the Product Feedback Center. If you think this will help you, go vote on it. If you think this is a stupid idea, go vote on it.

Thanks.

I have written before about the wonderful new parameter of the DataView.ToTable method – DistinctRows.

Interestingly, the new DataTable that is created still has ties to the parent DataSet. So if you want to add it to another DataSet, you will have to add a DataTable.Copy of it rather than the new DataTable itself.
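A small sketch of the pattern, using hypothetical in-memory Customers data so it runs without a server. In the released .NET 2.0 bits the Boolean parameter of ToTable is named `distinct`; it comes first, followed by the columns to compare. Adding a Copy works whether or not the ToTable result is still tied to the original DataSet.

```vbnet
Imports System.Data

' Sketch only: the Customers/Cities data and names are hypothetical.
Public Module DistinctSketch

    ' Builds a DataSet with a Customers table containing a repeated City value.
    Public Function BuildSource() As DataSet
        Dim ds As New DataSet("Source")
        Dim t As DataTable = ds.Tables.Add("Customers")
        t.Columns.Add("Name", GetType(String))
        t.Columns.Add("City", GetType(String))
        t.Rows.Add("Ann", "Burlington")
        t.Rows.Add("Bob", "Burlington")
        t.Rows.Add("Cy", "Montpelier")
        Return ds
    End Function

    ' Returns a second DataSet holding one row per distinct City.
    Public Function DistinctCities(ByVal source As DataSet) As DataSet
        Dim view As New DataView(source.Tables("Customers"))
        ' True = keep only rows that are distinct across the listed columns.
        Dim cities As DataTable = view.ToTable(True, "City")
        cities.TableName = "Cities"
        Dim target As New DataSet("Target")
        ' Add a Copy so the table placed in target has no tie to any other DataSet.
        target.Tables.Add(cities.Copy())
        Return target
    End Function
End Module
```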

(I have modified this post)

When you move a DataTable’s guts into another DataTable via DataTable.CreateDataReader (which returns a DataTableReader) and DataTable.Load in ADO.NET 2.0, you don’t carry over the parent DataSet hook, so you can add the second DataTable to another DataSet without having to worry about using Copy. Just a little itty bitty thing.
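Sketched out, the reader/Load route looks like this. The module, function, and DataSet names are hypothetical; the calls are DataTable.CreateDataReader and DataTable.Load.

```vbnet
Imports System.Data

' Sketch only: names are hypothetical.
Public Module ReaderLoadSketch

    ' Streams a table's rows into a brand-new DataTable, then adds that
    ' new table to a different DataSet without calling Copy.
    Public Function MoveViaReader(ByVal source As DataTable, ByVal newName As String) As DataSet
        Dim fresh As New DataTable(newName)
        Using reader As DataTableReader = source.CreateDataReader()
            ' Load builds the schema from the reader and then fills the rows.
            fresh.Load(reader)
        End Using
        Dim other As New DataSet("Other")
        other.Tables.Add(fresh)
        Return other
    End Function
End Module
```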

I mentioned in a previous post that the batch updating process was changed in the current (November) bits of Whidbey to work around a SQL Server limitation: you cannot have more than 2,100 parameters in one query.

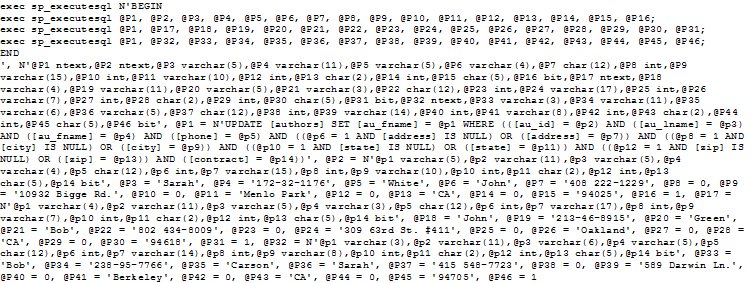

In response to a discussion in the newsgroups (ADO.NET 2.0 Batch Update), below is a screenshot from SQL Profiler with the earlier bits when I had set UpdateBatchSize=3.

It bunched 3 queries together into one big query. With 15 parameters per query, SQL Server was receiving 46 parameters (the 45 row parameters plus the query string itself, which was stuffed into @P1).

In this case, I would hit the limit at around 140 rows (140 rows × 15 parameters = 2,100).
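The arithmetic as a tiny sketch. It assumes, as the Profiler trace suggested, that the query text in @P1 counts toward the 2,100-parameter limit along with the row parameters; the module and function names are mine.

```vbnet
' Sketch only: models the early-beta batching, where one query carried every row's parameters.
Public Module ParameterMath

    ' SQL Server rejects requests with more than 2,100 parameters.
    Public Const MaxParameters As Integer = 2100

    ' Row parameters plus one more for the query text in @P1.
    Public Function ParametersForBatch(ByVal rows As Integer, ByVal paramsPerRow As Integer) As Integer
        Return rows * paramsPerRow + 1
    End Function

    ' Largest batch that stays within the limit under that same assumption.
    Public Function MaxRowsPerBatch(ByVal paramsPerRow As Integer) As Integer
        Return (MaxParameters - 1) \ paramsPerRow
    End Function
End Module
```

With 15 parameters per row, ParametersForBatch(3, 15) gives the 46 seen in Profiler, and MaxRowsPerBatch(15) gives 139, so a 140-row batch is the first one over the line.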

In the new bits, if you watch Profiler, you will see one row updated per query: ADO.NET now sends a group of individual queries in each batch rather than one huge query. I have sent batches of 10,000 rows at a time.

Example from Beta 1 October CTP – no longer true for future releases of .NET 2.0

Posted from BLInk!