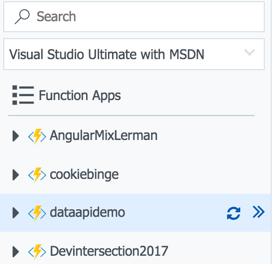

I’ve got an Azure Function App with some functions that I built for a demo for a conference session. I first used this at AngularMix and had named the function app AngularMix2017. Then when I did it for DevIntersection this past week, I recreated it as DevIntersection2017 because you can’t rename a function app. I’m doing it yet again next week at another conference (NetConf.co) and decided it was time to create it as a reusable name: DataApiDemo.

While you can’t flat out copy a function app if you are working strictly in the portal (which is how I’ve been working during my first learning stages of building function apps), you can easily enough duplicate it by downloading and uploading it again.

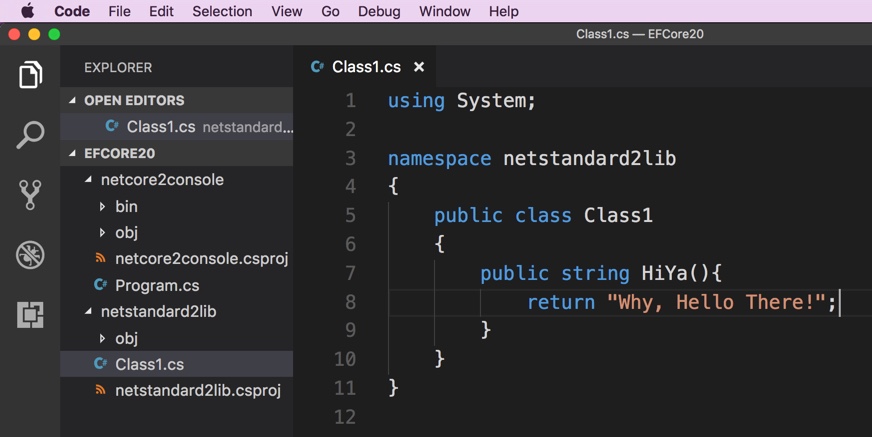

Keep in mind that if you are developing functions in VS 2017 or VS Code (combined with the Azure CLI) you can just use the tooling to publish the function apps along with the settings. But I don’t like to take the easy path. I learned a lot of new tricks and tools by going this route. Even if I leverage the tooling on my next go round, I’ve learned a lot which gives me a better understanding overall, more control and troubleshooting skills.

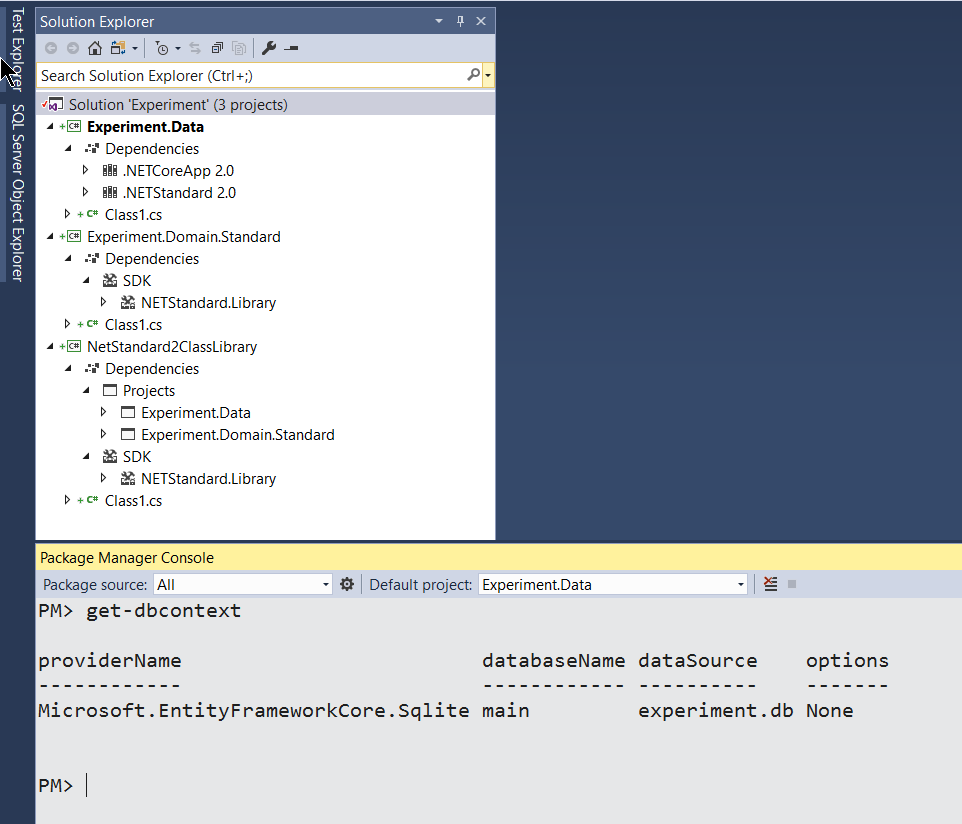

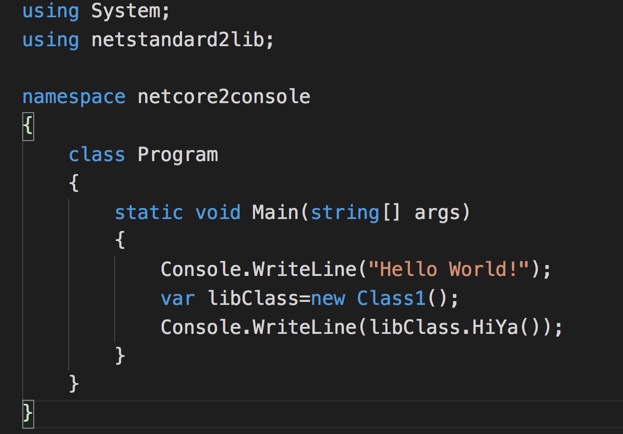

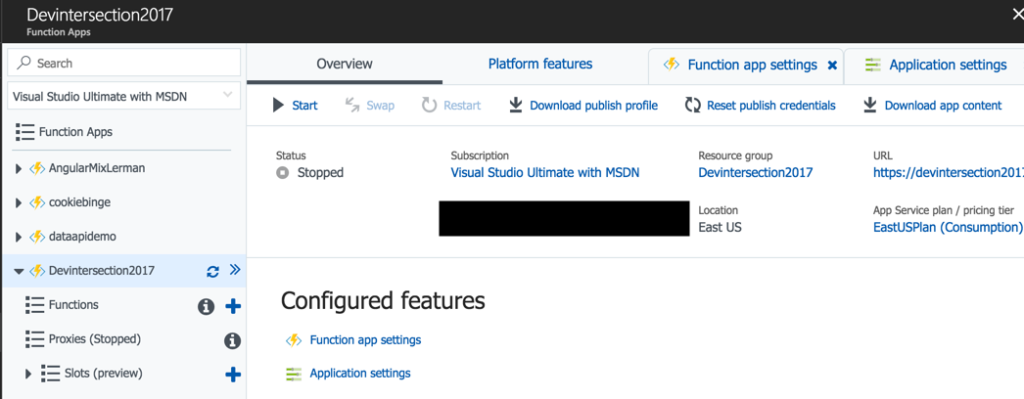

My first step was to create the new function app (dataapidemo).

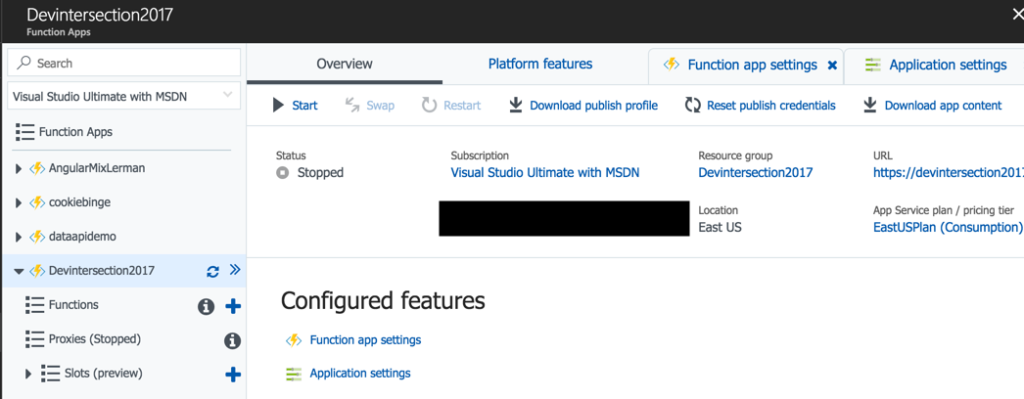

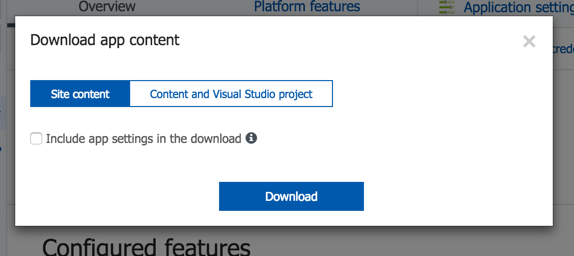

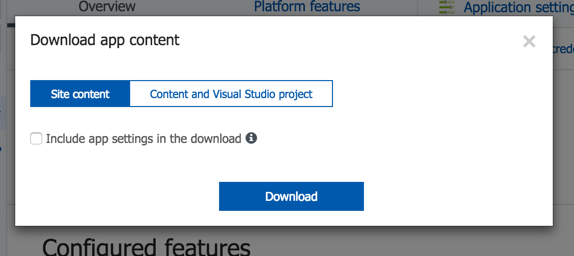

The Overview blade of an Azure function app has a “download app content” option. That will pull down all of the relevant files onto your machine. So next, I go to the overview of the DevIntersection2017 function app and click on the download app content link.

When downloading the app content, you have an option to also grab the app settings. I have a number of secrets stored in my settings: things like keys to my databases, account ids and more. If you check that option, the settings will get downloaded to a local settings file. If you were using this feature to continue your development locally, e.g. in VS2017, then you’ll want them. But for my scenario, they are not useful and are even a potential security issue (you’ll see why shortly), so I’m going to leave that unchecked.

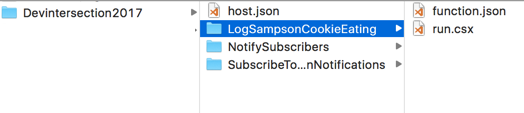

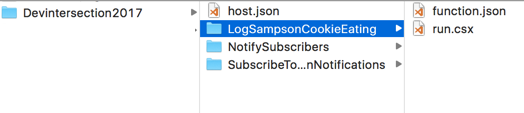

I can see the files in the designated folder on my computer.

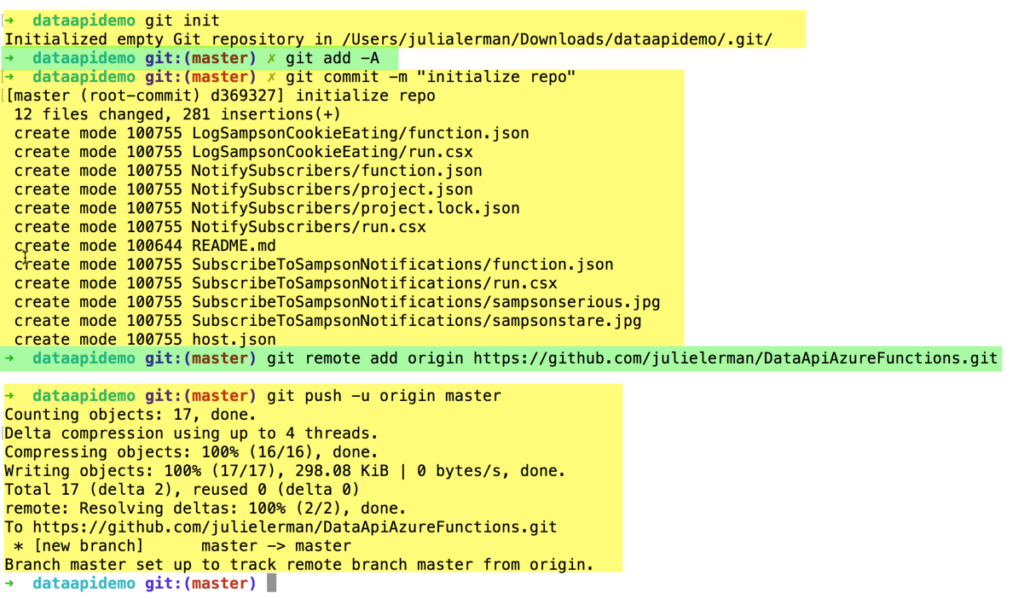

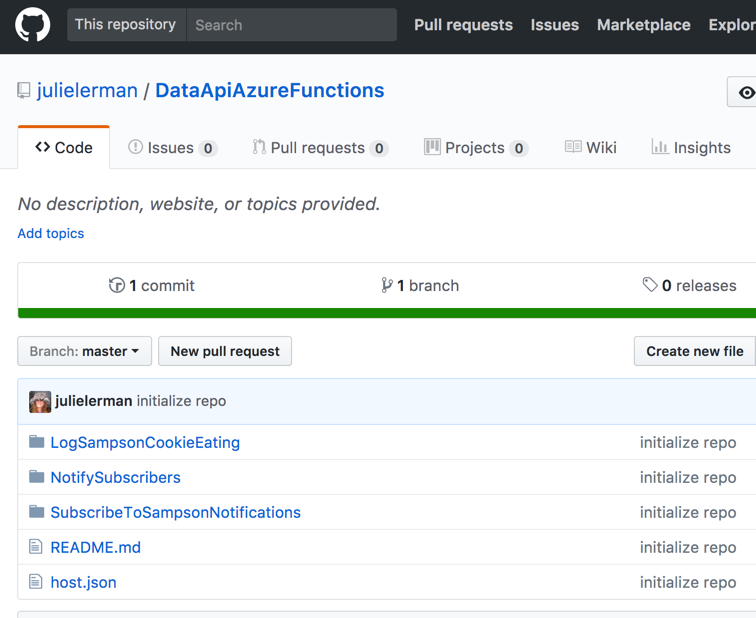

I’ve already created a GitHub repository to store these files in: https://github.com/julielerman/DataApiAzureFunctions.

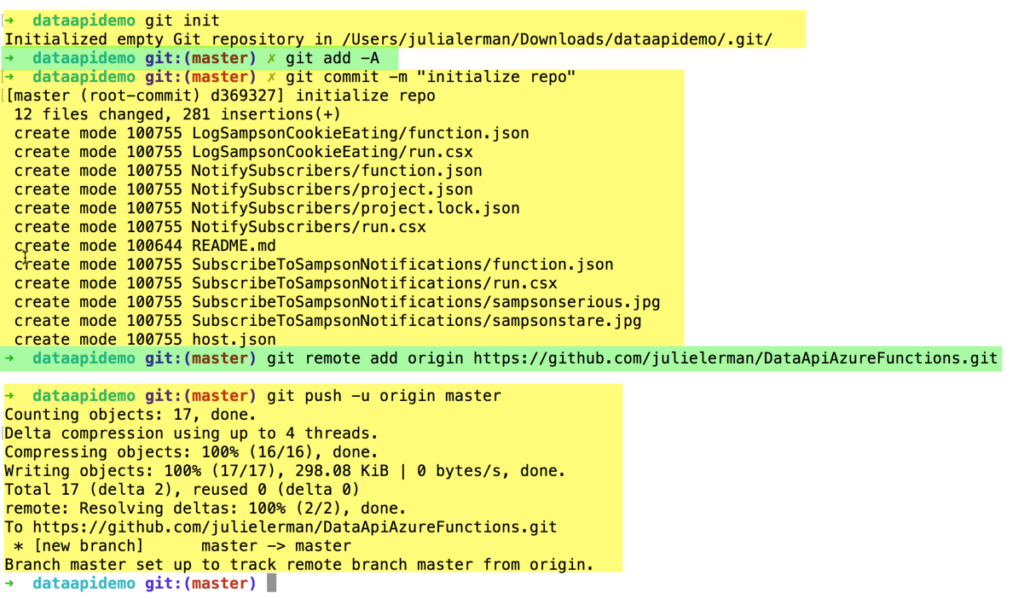

At the command line in the new folder, I’ll git init, add all of the files into the local repo and commit them. Then I can run the git commands to connect that folder to my GitHub repo and then push my local repository into the online repository.
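If you’d rather see those Git steps spelled out, here is a minimal sketch wrapped in a small shell function. The function name `publish_folder`, the commit message, and the choice to push `HEAD` (whatever your current branch is called) are assumptions for illustration, not something the portal or GitHub requires.

```shell
# Hypothetical helper: turn the current folder into a Git repo and push it.
# Usage: publish_folder <remote-url>
publish_folder() {
  local remote_url="$1"
  git init &&                                   # create the local repository
  git add . &&                                  # stage every downloaded file
  git commit -m "Initial commit of function app content" &&
  git remote add origin "$remote_url" &&        # connect the folder to the online repo
  git push -u origin HEAD                       # push the current branch and track it
}
```

Run from inside the downloaded folder, a call would look like `publish_folder https://github.com/julielerman/DataApiAzureFunctions`.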

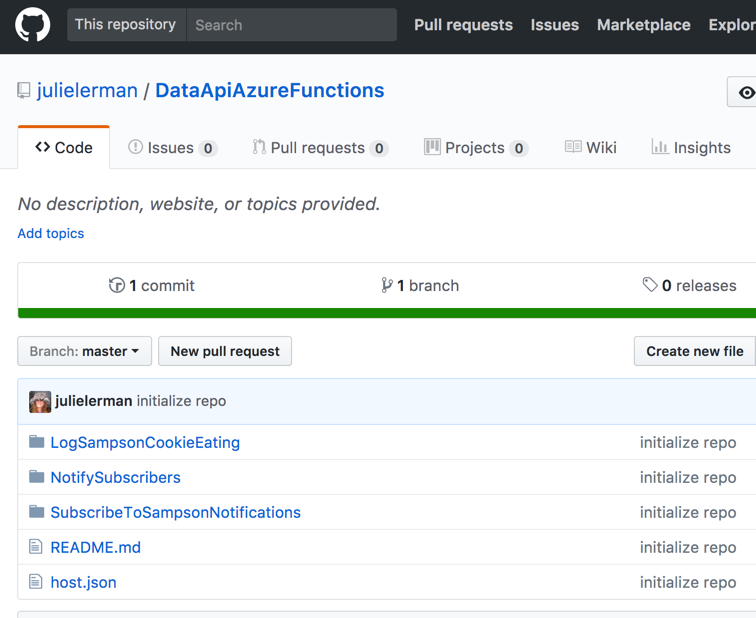

When that’s complete, the files are all in my GitHub repo.

Because I’m using a public repository, I didn’t want the local.settings.json file, filled with my secrets, to download. Of course I could have deleted it locally or told Git to ignore that file. One other point about this: if I were developing this locally, I could keep that file on my machine and use VS2017 or the Azure CLI tools to publish my app along with the settings to the portal. But that is not my goal here.
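For the "tell Git to ignore that file" option, a minimal sketch looks like this. It assumes you run it in the root of the local repo, before the first commit.

```shell
# Tell Git to ignore the settings file so it never reaches the public repo.
echo "local.settings.json" >> .gitignore

# If the file had already been committed, you would also need to stop tracking it
# (this keeps the local copy on disk):
# git rm --cached local.settings.json
```

Keep in mind that ignoring the file only prevents future commits. Anything already pushed stays in the repository history.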

Now I can take advantage of a long-time feature of the Azure Portal: connecting an app to a repository so that it auto-deploys from the repository (in this case, to my function app).

Back in the portal, I select the new function app again, then from its Platform Features page, open the Deployment Options. Choose Setup new deployment and follow the steps to connect the function app to your repository. When you’ve completed the setup, you can use the Sync button to force the deployment to happen. All of the files are now in my new dataapidemo function app, including the function.json files that contain the function settings (e.g. integrations) and any other files I may have added to my functions.

Because I am using Twilio, one of my functions has a reference to Twilio in a project.json file and I need to restore that package to this function app. I did that by opening up the project.json and making a small mod to it (adding or removing a blank line), then saving it. That triggers the package restore.

What’s not there, however, are the function app settings. These are settings that are defined for the app and used by one or more of the functions. There are some default settings created by Azure, but I also have some others: those secrets, such as the account and telephone number for my Twilio integration, as well as a key for the function.

Some of these settings are my own custom settings that I want in the new function. I could add them in via the portal app settings interface, but I don’t like that path when I have a slew of settings.

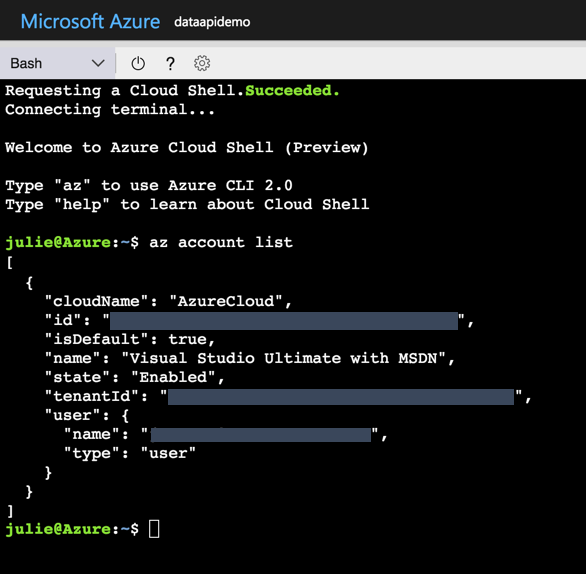

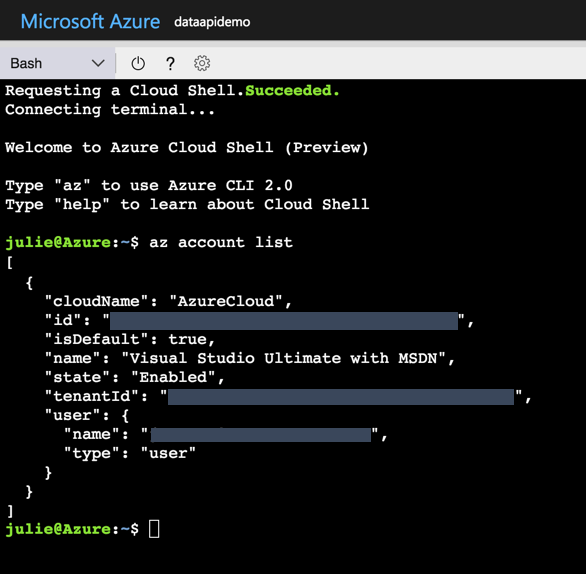

That means moving on to yet another amazing tool in the Azure Portal: Cloud Shell. Here you can run Azure CLI commands from a Bash or PowerShell session directly against services in your subscription. You can also do this locally on your machine, with the extra caveat that there you must provide credentials and other metadata.

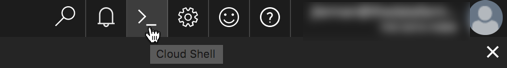

In the portal, open the Cloud Shell. This gives you an interactive terminal window.

If you have multiple subscriptions, you want to be sure that it’s set to the one where your function app is stored.

You can do this by listing the subscriptions with the az account list command.

If the one you want is not the default, then set it with:

az account set -s [ID]

This shell can do auto-complete so if the id starts with a123, you can type

az account set -s a123

then hit the tab key and the id will be completed for you. Hit enter and it becomes the default subscription to work in.
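Putting the subscription steps together, here is a small sketch that lists the subscriptions, switches to one, and then confirms which one is active. The function name `use_subscription` is made up for this example. The `az account` commands and their `--subscription`, `--query`, and `--output` parameters are part of the Azure CLI.

```shell
# Hypothetical helper: switch to a subscription and confirm which one is active.
# Usage: use_subscription <subscription-id-or-name>
use_subscription() {
  az account list --output table               # show all subscriptions in a readable table
  az account set --subscription "$1"           # -s is the short form of --subscription
  az account show --query name --output tsv    # print the name of the now-active subscription
}
```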

I’ll use the az functionapp commands to get the settings into my function app.

First I’ll list the existing settings. You do this with the command

az functionapp config appsettings list --name [functionappname] --resource-group [resourcegroupname]

The parameter shortcuts for name and resource-group are -n and -g. Mine are both the same name: dataapidemo. Here’s what the command looks like (except for the wrapping that my blog is forcing):

az functionapp config appsettings list -n dataapidemo -g dataapidemo

The appsettings are listed out as JSON by default, although you can change the output format with the --output (-o) parameter.

I’ll need to see the settings I want to copy from the other function app so I run:

az functionapp config appsettings list -n devintersection2017 -g devintersection2017 -o tsv

which gives me the list of settings from which to choose.

In addition to listing the settings, you can add new ones with the set verb and remove them with the delete verb. Both go in place of the verb list.
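As a sketch of the delete verb: it takes the names of the settings to remove through the `--setting-names` parameter, separated by spaces. The wrapper function `remove_settings` and the setting name in the usage line are hypothetical and only there to keep the example short.

```shell
# Hypothetical helper: remove one or more settings from a function app.
# Usage: remove_settings <app-name> <resource-group> <setting-name>...
remove_settings() {
  local app="$1" group="$2"
  shift 2                                      # what remains in "$@" are the setting names
  az functionapp config appsettings delete -n "$app" -g "$group" --setting-names "$@"
}
```

For example, `remove_settings dataapidemo dataapidemo OldSetting` would remove a setting named OldSetting from the new function app.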

To add or update settings, combine the set verb with the --settings parameter. After --settings, list one or more name=value pairs separated by spaces (not commas), in this format:

az functionapp config appsettings set -n dataapidemo -g dataapidemo --settings "property1name=value" "property2name=value"

Note that this is wrapping here on the blog post but not in the shell window.

So I added the handful of settings I wanted, which looked more like:

az functionapp config appsettings set -n dataapidemo -g dataapidemo --settings "TwilioSID=myvalue" "TwilioAccount=myvalue"

There were others I had to add. For example, my function needs to know how to get to my Cosmos DB document database, so it has a setting that uses the database name as the property name and the database account’s endpoint as the value.
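If you have a lot of settings to move, the list and set commands can be combined so you never have to retype the values. The following is only a sketch under a few assumptions: the function name `copy_settings` is invented, and it relies on each function app living in a resource group with the same name as the app, as mine do. The `--query` parameter takes a JMESPath expression to pull a single value out of the JSON list.

```shell
# Hypothetical helper: copy named settings from one function app to another.
# Assumes each app's resource group has the same name as the app itself.
# Usage: copy_settings <source-app> <target-app> <setting-name>...
copy_settings() {
  local src="$1" dst="$2" name value
  shift 2
  for name in "$@"; do
    # Pull just this setting's value out of the source app's settings list.
    value=$(az functionapp config appsettings list -n "$src" -g "$src" \
      --query "[?name=='$name'].value | [0]" -o tsv)
    # Write it to the target app under the same name.
    az functionapp config appsettings set -n "$dst" -g "$dst" \
      --settings "$name=$value"
  done
}
```

A call such as `copy_settings devintersection2017 dataapidemo TwilioSID TwilioAccount` would then copy those two settings across.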

Once I had done that, I was able to get rid of the function apps that were named for each conference and use a common one no matter where I am sharing it.

And boyohboy did I learn a lot of new things!