read this and then this post to understand the problem.

Well … I started out trying this against pubs and it didn’t work and then I tried it with AdventureWOrks and it didn’t work. I focused on pubs and made a lot of changes without it working. Then I finally went back to AdventureWorks and it did work!! So I’m not sure exactly which thing I changed previously, but the to get pubs to work with query notification was in the DataBase, I needed to set the Database Properties/Options/Miscellaneous: “Database Compatibility Level” to Version90. It had been at Version80.

Note that all of the other Miscellaneous options for pubs were set to false and I did not have to change them for it to work.

Wally McClure and I talked about some of the issues about when to make particular calls. I found that the samples in the docs and Bob Beauchamin’s article were fine in terms of creating the connection, creating the command, opening the connection, creating the dependency, creating an event handler, etc worked fine.

Here are some things that I was unsure about.

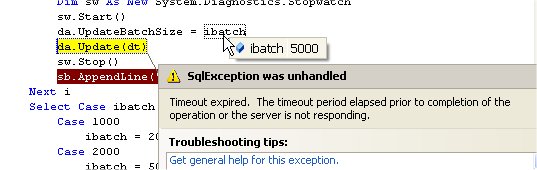

All of the samples I saw showed doing a read on the SqlDataReader that was returned by the command that I attached the dependency to. So I started out with this code (which works):

SqlDataReader rdr=cmd.ExecuteReader();

while (rdr.Read())

Console.WriteLine(rdr[0]);

rdr.Close();

Once I had that working, I did what I really wanted to do which was return the SqlDataReader to my form (in real life, maybe a middle tier) which then loaded it into a DataTable and did something with the DataTable. So I changed the code to:

SqlDataReader rdr=cmd.ExecuteReader();

return rdr;

and that worked just fine. So according to this test it is NOT necessary to do a read on the SqlDataReader for the notification to fire.

The placement of the Open method of the connection did not seem to affect the notification query. Of course, just so long as it’s before you try to execute the command. I tried it before creating the dependency and I tried it after the dependency and event handlers were already created. This makes perfect sense to me. But it was something I was worrying about when things weren’t working. So that eases my mind to know that my instincts are okay.

So there were NOT a gazillion other things I had to do in SQL Server besides the frequently advertised ones.

1. Be sure the CLR is enabled in SQL Server

the tsql for that is

EXEC sp_configure ‘show advanced options’, ‘1’

go

RECONFIGURE

go

EXEC sp_configure ‘clr enabled’, 1

go

RECONFIGURE

2. Make sure that Enable_Broker is ON in your database

Wally explains how here

3. Make sure that SQLNotification will send messages with this TSQL statement

GRANT SEND send on service::SqlQueryNotificationService to guest

a) note that the word SqQueryNotificationService is case sensitive

b) you have to have admin priveleges in order to successfully run this command

4. The database user needs permission to subscribe to notification

GRANT SUBSCRIBE QUERY NOTIFICATIONS TO [the user]

–that is, whatever user the calling application is that is making requests. I haven’t tried ASP.NET yet, but I’m guessing that will be the Network Service account if IIS6 is your webserver.

5. Follow the query rules.

You have to write a query that can be parsed and that needs to follow some very specific rules. The three most notable are

a) The projected columns in the SELECT statement must be explicitly stated (i.e. select id, name, lastname from mytable not select * from mytable)

b)Table names must be qualified with two-part names (eg dbo.authors or person.contact)

c) Due to the above one, this means that all tables referenced in the statement must be in the same database.

I posted a full list here

Even though I fiddled for 2 days with this, I think the above represents the right combination of things to attend to.

The last caveat is that this is still a beta. From reading Bob’s article, we can rest assured that MS is going to simplify this stuff. As for limiting your queries to “hello world“ applications (I’m exaggerating… but see Roman’s comment) maybe they’ll make it smarter or maybe the more full blown notification services will be the way to go. I don’t really know – that’s just a completely wild guess.

Posted from BLInk!